
Varnish + Etcd + Fleet

In my previous post I showed how to set up a 3-node backend cluster almost automatically.

Now I want to show you how I am using it.

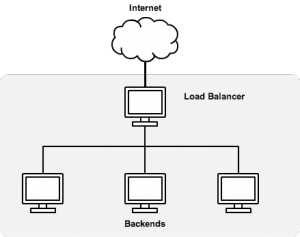

Let us extend this with a front end, in other words a load balancer: a single machine that will redirect our requests to any of the nodes.

I am opting to use Ubuntu, Varnish and Etcd for the front-end machine (you can also put Nginx in front of Varnish if you need to handle HTTPS traffic, since Varnish does not terminate TLS itself). In the diagram it is labeled "Load Balancer".

You can easily adapt this guide to however many nodes you need, whether 2 or 20.

Requirements

- Set up a cluster of CoreOS nodes (as described in the previous post)

- Install Ubuntu Server 14.04 on the machine that will act as the load balancer

- Install Varnish

- Install Etcd

- Install the ksh shell (apt-get install ksh)
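Why ksh? In sync_varnish.sh the backends are collected inside a `while read` loop that is the last stage of a pipeline. ksh93 runs that last stage in the current shell, so the `project` array is still filled once the loop ends; bash by default runs it in a subshell and the array comes back empty. A minimal bash sketch of the difference, assuming bash 4.2 or newer, where `shopt -s lastpipe` opts into the ksh behaviour in non-interactive scripts (the host names are made up):

```bash
#!/bin/bash
# Default bash: the while loop is the last stage of a pipeline and runs in a
# subshell, so anything appended to the array inside it is lost afterwards.
default_items=()
printf '%s\n' core-01 core-02 | while read -r host; do
  default_items+=("$host")
done

# With lastpipe (bash 4.2+, job control off, as in scripts) the last stage runs
# in the current shell, which is what ksh93 does out of the box.
shopt -s lastpipe
lastpipe_items=()
printf '%s\n' core-01 core-02 | while read -r host; do
  lastpipe_items+=("$host")
done

echo "default: ${#default_items[@]}, lastpipe: ${#lastpipe_items[@]}"
```

Installing ksh keeps the scripts exactly as written; `lastpipe` is only an alternative if you would rather stay on bash.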

Now that you have all the packages installed, we can begin.

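Let's set up the Varnish config:

```sh
mkdir /etc/varnish/config.d
touch /etc/varnish/config.d/backends.vcl
touch /etc/varnish/config.d/directors.vcl
nano /etc/varnish/default.vcl
```

Give /etc/varnish/default.vcl the following contents (Varnish 3 syntax, the version Ubuntu 14.04 ships):

```vcl
# Available backends
include "/etc/varnish/config.d/backends.vcl";
include "/etc/varnish/config.d/directors.vcl";

sub vcl_recv {
    # Send every request through the round-robin director from directors.vcl
    set req.backend = project;

    # Don't cache anything at the moment
    return (pass);
}
```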

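Create /root/process_etcd_change.sh:

```sh
touch /root/process_etcd_change.sh
chmod +x /root/process_etcd_change.sh
nano /root/process_etcd_change.sh
```

```sh
#!/bin/ksh -e
# Let a burst of etcd changes settle before reading the store, so keys that
# change while this script holds the lock are still picked up by this run
sleep 30
etcdctl ls --recursive /varnish/backends | /root/sync_varnish.sh
```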

Create /root/sync_varnish.sh:

```sh
touch /root/sync_varnish.sh
chmod +x /root/sync_varnish.sh
nano /root/sync_varnish.sh
```

```sh
#!/bin/ksh -e
# Reads `etcdctl ls --recursive` output on stdin and rebuilds the Varnish
# backend and director includes. ksh runs the last stage of a pipeline in the
# current shell, so the project array filled in the inner loop survives it.

echo "" > /etc/varnish/config.d/backends.vcl
echo "" > /etc/varnish/config.d/directors.vcl

while read line ; do
  # Turn every / and : into a space, so a key such as
  # /varnish/backends/<type>/<host>:<port> splits into separate fields
  echo "$line" | sed -e 's|[/:]| |g' | while read -r varnish backends type host port ; do
    # Skip directory entries that have no host or port
    [ -z "$host" ] && continue
    [ -z "$port" ] && continue

    # VCL identifiers cannot contain dashes or dots
    host_underscore=${host//[-.]/_}
    type_without_dash=${type//-/}
    backend="${type_without_dash}_${host_underscore}"

    echo "backend ${backend} {
  .host = \"${host}\";
  .port = \"${port}\";
  .connect_timeout = 5s;
  .first_byte_timeout = 5s;
  .between_bytes_timeout = 2s;
  .probe = {
    .request =
      \"GET /probe.txt HTTP/1.1\"
      \"Host: ${host}\"
      \"Connection: close\";
    .timeout = 2s;
    .interval = 3s;
    .window = 10;
    .threshold = 8;
  }
}
" >> /etc/varnish/config.d/backends.vcl

    # Remember which backends belong to the project director
    case $type in
      project)
        project+=("$backend")
        ;;
    esac
  done
done

# One round-robin director over every project backend
echo "director project round-robin {" >> /etc/varnish/config.d/directors.vcl
for i in "${project[@]}"
do
  echo "  { .backend = ${i}; }" >> /etc/varnish/config.d/directors.vcl
done
echo "}" >> /etc/varnish/config.d/directors.vcl

service varnish reload
```
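To see what the parsing step does with a single key before wiring it up to etcd, here is a stand-alone bash sketch of the same split. The function name `key_to_backend` and the sample keys are hypothetical; it assumes keys of the form /varnish/backends/<type>/<host>:<port>, which is the layout the sed/read split in sync_varnish.sh expects.

```bash
#!/bin/bash
# Hypothetical helper mirroring the parsing in sync_varnish.sh: turns one line of
# `etcdctl ls --recursive` output into "<backend name> <host> <port>".
key_to_backend() {
  local varnish backends type host port
  # Turn every "/" and ":" into a space, then split the result into fields
  read -r varnish backends type host port <<< "$(printf '%s\n' "$1" | sed -e 's|[/:]| |g')"
  # Directory entries such as /varnish/backends/project have no host or port
  if [ -z "$host" ] || [ -z "$port" ]; then
    return 1
  fi
  # Dashes and dots are not allowed in VCL identifiers
  local host_underscore=${host//[-.]/_}
  local type_without_dash=${type//-/}
  printf '%s %s %s\n' "${type_without_dash}_${host_underscore}" "$host" "$port"
}

key_to_backend /varnish/backends/project/core-01:80     # project_core_01 core-01 80
key_to_backend /varnish/backends/project/10.0.0.5:8080  # project_10_0_0_5 10.0.0.5 8080
```

Running a few of your real keys through it is a quick way to confirm the generated backend names are valid before Varnish tries to compile them.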

Add the following to /etc/rc.local, just before the `exit 0` line (if you also start etcd from rc.local, put it after that line):

```sh
# Runs in the background so rc.local can still reach 'exit 0'
etcdctl exec-watch --forever --recursive /varnish/backends -- flock -n /var/lock/sync_varnish.lock /root/process_etcd_change.sh &
```

So what's going on here?

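etcdctl watches every key under /varnish/backends in the store. Whenever one of them changes, it runs process_etcd_change.sh through `flock -n`, so only one sync runs at a time; the 30-second sleep inside the script keeps the lock held, so a burst of changes results in a single sync.

Together, the two scripts read all the backends from etcd, regenerate backends.vcl and directors.vcl, and reload Varnish.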
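The `flock -n` in the rc.local line is what collapses a burst of etcd events: while one run of process_etcd_change.sh holds /var/lock/sync_varnish.lock, further events fail to get the lock and are skipped rather than queued. A small bash sketch of that behaviour, using a throw-away temp file instead of the real lock and `sleep 2` as a stand-in for the script (it assumes util-linux `flock` and a `sleep` that accepts fractions, as GNU coreutils does):

```bash
#!/bin/bash
# Stand-in lock file; the real setup uses /var/lock/sync_varnish.lock
lock_file=$(mktemp)

# First event: holds the lock for 2 seconds, like a running sync would
flock -n "$lock_file" sleep 2 &
holder=$!
sleep 0.5

# Second event arriving meanwhile: flock -n exits at once instead of waiting
if flock -n "$lock_file" true; then
  second_event=ran
else
  second_event=skipped
fi

wait "$holder"
rm -f "$lock_file"
echo "second event: $second_event"
```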

All of the above uses Varnish as nothing more than a simple load balancer, but if you are a bit more creative you can do much more with it.

You could even have different types of backend, with rules governing which one to use.
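The `case $type in project)` branch in sync_varnish.sh is where other backend types would hook in. As a sketch only, assuming a hypothetical second type called `api`, this bash function (its name `directors_for` is also hypothetical) builds one round-robin director per type from `<type> <backend>` pairs instead of hard-coding `project`:

```bash
#!/bin/bash
# Hypothetical sketch: emit one Varnish 3 round-robin director per backend type.
# Input lines on stdin are "<type> <backend name>" pairs.
directors_for() {
  local -A members=()
  local type backend
  while read -r type backend; do
    [ -n "$backend" ] || continue
    # Append one director entry per backend, grouped by type
    members[$type]+="  { .backend = ${backend}; }"$'\n'
  done
  # Sort the type names so the output order is stable
  for type in $(printf '%s\n' "${!members[@]}" | sort); do
    printf 'director %s round-robin {\n%s}\n' "$type" "${members[$type]}"
  done
}

printf '%s\n' "project project_core_01" "api api_core_02" "project project_core_02" | directors_for
```

A rule in `vcl_recv` would then pick the director per request, for example `if (req.http.host ~ "^api\.") { set req.backend = api; }`; the host-based rule is only one possible illustration.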

Shortcomings

- To change a service defined in cloud-init, you need to remove it from fleet and re-add it. [suggested solution]

- You need to reboot the cluster manually to get Docker container updates. [suggested solution]